Despite the gender and racial biases inherent in its programming, artificial intelligence (AI) has seeped into every facet of life and is in the early stages of dictating who gets a job, a home or car loan, or adequate healthcare, and even who is flagged as likely to break the law.

Google Gemini’s depiction of U.S. senators from the 1800s as Black and Native Americans is a prime example of AI misrepresentation. Errors like these can severely affect individuals’ economic well-being and livelihoods, and biased AI is estimated to cause multibillion-dollar economic losses every year.

This issue is compounded by misrepresentations and inaccuracies, and the future is uncertain as more AI safety researchers from OpenAI resign or are terminated each week.

Regardless of the position you hold or whether you’re part of a marginalized group, mitigating the consequences of discriminatory AI is up to each and every one of us. It’s our duty to steer public policy toward an equitable future through a greater understanding of the implications of such technology and, because large language models (LLMs) are trained on human-written text, of our own biases as well.

AI’s Impact on Employment (Hiring Preferences & Biases)

Many studies raise a looming, and valid, concern about how AI absorbs the human biases in the data it’s trained on, and what that means for its trustworthiness.

Professor Rangita de Silva de Alwis at the University of Pennsylvania Carey Law School studied this problem closely in her report ‘The Elephant in AI’. She looked into employment platforms using perceptions from 87 Black students and professionals while also analyzing 360 online professional profiles. The aim of the study was to understand how AI-driven platforms are contributing to anti-Black bias.

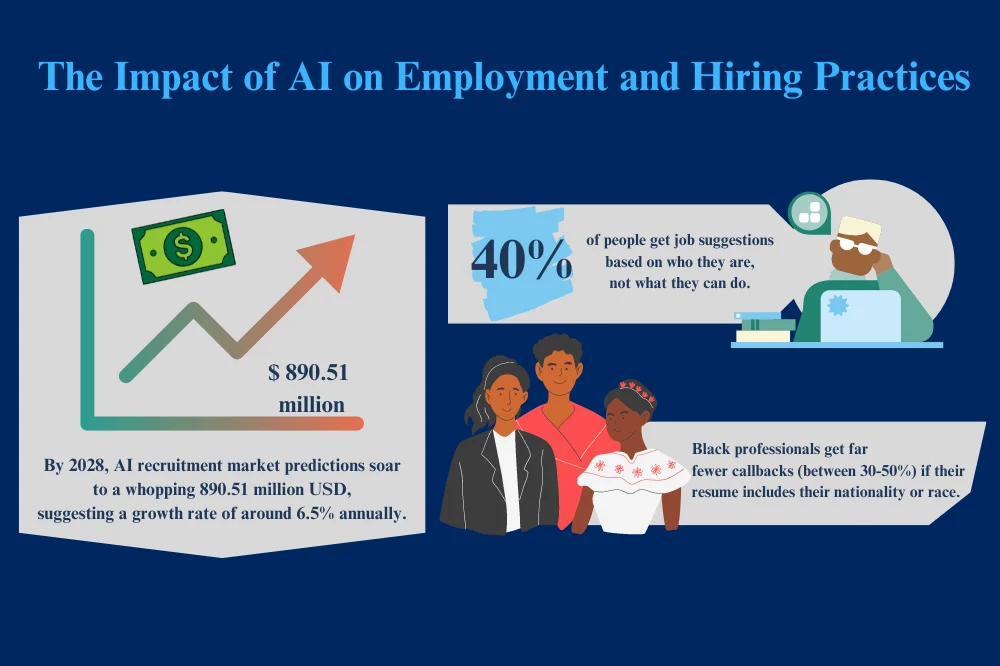

The study concluded that about 40% of people get suggestions based on their skin color rather than their skillset. Additionally, 30% receive job alerts that fail to match their talent or experience.

What’s more, Black professionals get 30 to 50% fewer callbacks if their resume includes their nationality or race. Exams like the LSAT also appear biased against minority groups trying to find work.

Furthermore, Reuters reported in 2018 that Amazon had stopped using an AI hiring system because it showed a clear preference for men.[1]

The AI learned from resumes Amazon received over the previous ten years and, since most of these were from men, the AI concluded that men were better candidates than women — following the example of the biased humans who had done the initial hiring.

The AI recruitment market continues to grow: Facts & Factors valued it at 610.3 million USD in 2021 and forecasts 890.51 million USD by 2028, a compound growth rate of around 6.5% annually.[2] With these forecasts in mind, it’s all the more important that we ensure these AI programs run equitably.
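The quoted growth rate can be checked with the standard compound annual growth rate (CAGR) formula. The sketch below is a minimal check that uses only the two market values given above; the function name `cagr` and the number of compounding periods are assumptions made for illustration. Treating the forecast as six yearly steps reproduces roughly 6.5%, while compounding over all seven years from 2021 gives a lower rate of roughly 5.5%.

```python
def cagr(start_value: float, end_value: float, periods: int) -> float:
    """Compound annual growth rate over `periods` yearly steps."""
    if start_value <= 0 or periods <= 0:
        raise ValueError("start_value and periods must be positive")
    return (end_value / start_value) ** (1 / periods) - 1


START_MUSD = 610.3   # 2021 market size in million USD (from the text)
END_MUSD = 890.51    # 2028 forecast in million USD (from the text)

# Assumption: the forecast window is six yearly steps (2022 to 2028).
six_step = cagr(START_MUSD, END_MUSD, 6)
# Assumption: growth compounds over all seven years starting in 2021.
seven_step = cagr(START_MUSD, END_MUSD, 7)

print(f"6 periods: {six_step:.2%}")
print(f"7 periods: {seven_step:.2%}")
```

Either way, the market grows by about 46% in total over the period, so the pace is steady rather than explosive.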

Housing Rejection: Racial Discrimination Leads to Home Loan Denials for Black Americans

Researchers Leying Zou and Warut Khern-am-nuai discovered that racial bias played a part in past mortgage applications in the U.S.

Studies show that, all too commonly, Black applicants were rejected for loans more frequently than white applicants under similar conditions. Zou and Khern-am-nuai found that ordinary machine learning models actually amplified this racial bias beyond that of human decision-makers when approving or rejecting loan applications.

To drive this point home, a National Bureau of Economic Research working paper revealed similar trends in the rental market.[3] The researchers submitted applications to over 8,000 landlords using bots bearing names associated with various demographics, and the results showed clear discrimination against renters of color, especially Black Americans.

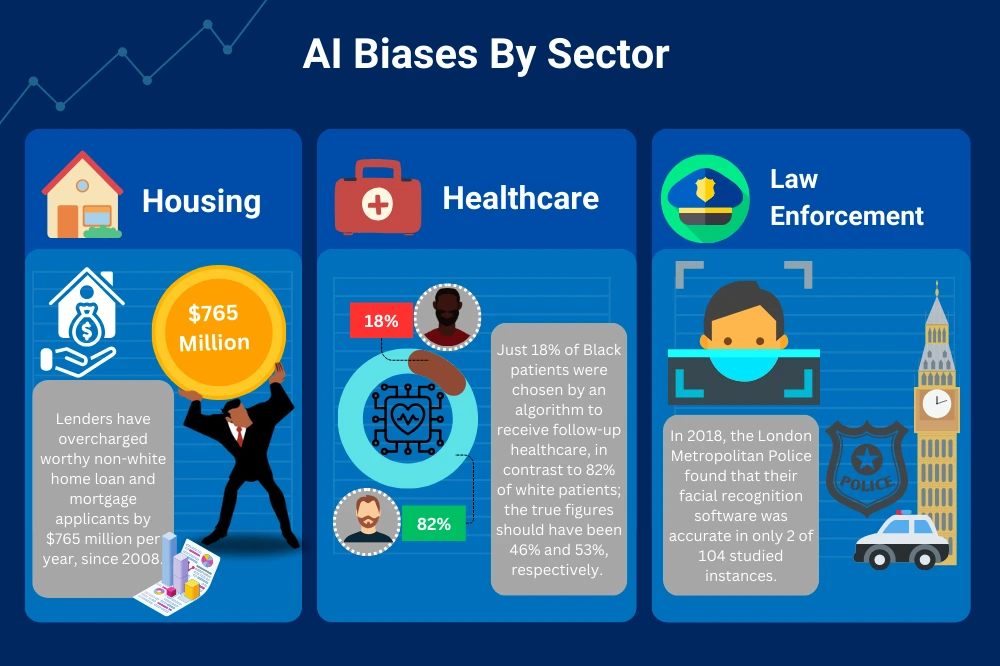

On top of being more likely to be unfairly denied, non-white Americans are far more likely to face unjustifiably steep housing loan and mortgage rates — to the tune of $765 million annually between 2008 and 2015.

Researchers at MIT have developed a method that removes multiple biases from a mortgage lending dataset, improving both the accuracy and the fairness of machine learning models trained on it.

These examples emphasize the urgent need for careful management and monitoring when AI systems are used in mortgage lending, so as to maintain equity and avoid reproducing human biases. Because the AI landscape and its uses keep changing, the issue demands constant research and scrutiny.

AI Decides Who Is Worthy of Quality Healthcare

A report in the Journal of the American Medical Association reviewed over 70 publications that compared the diagnostic performance of doctors with that of digital algorithms and AI across different medical fields.[4] Most of the data used to train these AIs came from just three states (California, New York, and Massachusetts), which points to a lack of variety in the training data.

Unsurprisingly, AI issues have crept into the healthcare industry, where faulty diagnoses, harmful treatments, and costly, unnecessary tests can raise the cost of (already wildly expensive) healthcare.

The Yale Journal of Health Policy, Law & Ethics published an article outlining this conundrum. It examines algorithmic systems that select people for ‘high-risk care management’ programs and concludes that these algorithms often failed to recommend racial minorities for healthcare services, leaving them without adequate medical attention and care.

The article also highlights that this kind of bias can violate civil rights laws such as Title VI of the Civil Rights Act and Section 1557 of the Affordable Care Act, especially if it continues to single out individuals based on their ethnicity or race.[5]

The NIHCM Foundation also states that algorithms used to spot patients with complex health needs may carry racial bias. Its research shows that Black and white patients can get the same risk score from an algorithm even when the Black patients are significantly sicker.

The algorithm they studied used past healthcare spending for scoring risks and — as you might guess by now — because of the already prevalent racial differences in the healthcare system, Black patients spend less (and generally have less to spend) on healthcare than white patients at similar health levels and are therefore often overlooked.

The research concluded that Black patients with multiple health problems do not always get the help they need. At the same risk score, Black patients had 26.3% more chronic illnesses than white patients. The care management program is supposed to pick up these patients, yet Black patients made up only 17.7% of those the algorithm selected for the program.

If all were fair and equal, this share would be much higher, standing close to 46.5%, which indicates an inherent bias in the system.
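The spending-based scoring described above can be illustrated with a toy example. Everything in the sketch below is hypothetical: the patient records, the field names, and the helpers `select_top` and `share_of_group` are invented for illustration and are not the algorithm or the data from the study. It ranks the same ten patients once by past spending and once by number of chronic conditions, then compares how many patients from the lower-spending group are selected.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Patient:
    group: str               # hypothetical demographic label
    chronic_conditions: int  # stand-in for actual health need
    past_spending: int       # stand-in for the cost-based proxy, in USD


# Hypothetical data: at equal need, group "B" patients have lower past spending.
PATIENTS = [
    Patient("A", 5, 9000), Patient("A", 4, 7500), Patient("A", 3, 6000),
    Patient("A", 2, 4000), Patient("A", 1, 2000),
    Patient("B", 5, 6500), Patient("B", 4, 5000), Patient("B", 3, 3500),
    Patient("B", 2, 2500), Patient("B", 1, 1000),
]


def select_top(patients, key, k):
    """Return the k patients with the highest value of `key`."""
    return sorted(patients, key=key, reverse=True)[:k]


def share_of_group(selected, group):
    """Fraction of the selected patients who belong to `group`."""
    return sum(p.group == group for p in selected) / len(selected)


K = 4  # hypothetical number of program slots
by_cost = select_top(PATIENTS, lambda p: p.past_spending, K)
by_need = select_top(PATIENTS, lambda p: p.chronic_conditions, K)

print(f"Group B share when ranked by spending: {share_of_group(by_cost, 'B'):.0%}")  # 25%
print(f"Group B share when ranked by need:     {share_of_group(by_need, 'B'):.0%}")  # 50%
```

In this toy data both groups are equally sick, yet ranking by spending halves group B's share of program slots. The real figures are the ones quoted from the research above; the sketch only shows how a cost proxy can produce that kind of gap.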

With clear similarities to the Yale article mentioned above, another study headed by Sendhil Mullainathan at the University of Chicago found racial bias in AI programs that determine which patients qualify for follow-up care.

In fact, 82% of the patients the algorithm deemed eligible for this ongoing care were white, while a mere 18% were Black. This stark contrast is even more shocking given that, under an unbiased review, the shares should have been about 46% Black and 53% white.

Racism & Xenophobia: What Law Enforcement Agencies & AI Have in Common

Police agencies also use AI to improve their work, but the data used to train it can be unfair towards certain groups, particularly minority communities and People of Color.

Take PredPol, a popular AI-based tool for predicting crime hotspots, for instance. Research from 2016 noted that predictive policing software caused law enforcement to over-police some areas and led to an unjustified confrontation with, and accusation of, a Black American, Robert McDaniel.[6]

As one might imagine, these officers began conducting far more stops in non-white communities and contributing to an atmosphere of distrust of officers due to their overreach.

Another study in 2018 found that using PredPol would cause Latino and Black neighborhoods in Indianapolis to face up to 4 times and 2.5 times as much police presence, respectively, as white communities. Many similar tools show this pattern as well, reflecting the bias within AI systems.

Predictive policing algorithms can often cost a lot despite their poor performance. The city of Chicago stopped using its $2 million police prediction program because it didn’t improve results. Palo Alto also broke off a deal for this sort of system when it noticed the software wasn’t even reducing crime rates. Los Angeles gave up on using these computer-based programs after a decade-long trial period led to no significant drop in crime.

Recently, some police forces have started using facial recognition technology to solve crimes, but these systems have displayed increased racism and xenophobia, as highlighted by the UN Committee on the Elimination of Racial Discrimination. A Yale Law School packet reported that Detroit’s facial recognition system misidentified people 96% of the time, leading to wrongful arrests of Black residents.[7]

Similarly, the facial recognition program used by the London Metropolitan Police force in 2018 was found to be accurate in a measly 2 of 104 cases, an even lower success rate than the software used in Detroit.
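The comparison between the two cities is simple arithmetic, sketched below. It assumes that Detroit's 96% figure is an error rate, so that its complement is the success rate, and that both figures measure the same kind of outcome; the variable names are illustrative.

```python
from fractions import Fraction

# London: 2 accurate results out of 104 (from the text).
london_success = Fraction(2, 104)

# Detroit: assumption that the 96% figure is an error rate,
# so the success rate is its complement.
detroit_success = 1 - Fraction(96, 100)

print(f"London success rate:  {float(london_success):.1%}")   # 1.9%
print(f"Detroit success rate: {float(detroit_success):.1%}")  # 4.0%
print("London lower than Detroit:", london_success < detroit_success)  # True
```

Under these assumptions the London system was right a little under 2% of the time, about half of Detroit's 4%.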

Another study found that accuracy varies by race and gender: the software was more likely to give false positives for Black women than for other groups.

Specifically, as found in the study performed by Professor Rangita de Silva de Alwis and outlined in “The Elephant in AI” (mentioned above), facial recognition software was wrong 34.4% more often when analyzing the faces of women with darker skin tones than when analyzing those of men with lighter skin.

Due to these concerns about privacy and erroneous identifications, San Francisco decided to stop police from using AI for facial recognition.

Suggested Improvements & Removal of Implicit Bias in the Field of AI

Making AI less biased must be prioritized globally, by citizens, companies, and policy makers alike, since the source data contains the same biases we hold and is often too narrow and imbalanced.

It’s crucial that governments, corporations, and those who train these programs do so as objectively and with as little bias as possible to maintain, or gain, integrity, transparency, and public trust. Achieving this requires looking carefully and critically at hidden biases and data-sampling issues, and it requires global regulation.

As demonstrated by the data above and below, these biases are pervasive across various sectors, underscoring the urgent need for more inclusive and unbiased AI systems.

An interesting study points out a problem, though: if AI systems are denied access to certain vital information, such as demographic data, their decisions can become even more biased.[8] This happens because, without full details to study, the AI is likely to make wrong guesses based on incomplete information.

The authors of this study offer some solutions. They propose training AI on large and inclusive datasets, which helps lessen these errors. Combating AI’s tendency to discriminate, especially against the minority groups and demographics already most discriminated against, requires both careful planning and constant oversight by principled leaders and honest system trainers.

On a brighter note, Oxford University researchers have also created a tool for detecting bias in AI systems.[9] Amazon has incorporated it into ‘Amazon SageMaker Clarify,’ an offering for clients of Amazon Web Services. The tool looks for discrimination in AI and machine learning systems, especially where characteristics like race, gender, age, and disability overlap.
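To make the idea of checking a system for discrimination concrete, here is a minimal sketch of one simple group-fairness check: comparing the rate of favorable decisions between two groups. It is not the Oxford tool and does not use the SageMaker Clarify API. The decisions, group labels, and function names are hypothetical, and the 0.8 cutoff is the conventional "four-fifths" rule of thumb, applied here purely as an assumption.

```python
from collections import defaultdict


def selection_rates(records):
    """Map each group to the fraction of its members with a favorable outcome."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, approved in records:
        totals[group] += 1
        positives[group] += int(approved)
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates, protected, reference):
    """Selection rate of the protected group divided by the reference group's."""
    if rates[reference] == 0:
        raise ValueError("reference group has no favorable outcomes")
    return rates[protected] / rates[reference]


# Hypothetical decisions as (group, approved) pairs.
DECISIONS = (
    [("X", True)] * 6 + [("X", False)] * 4
    + [("Y", True)] * 3 + [("Y", False)] * 7
)

THRESHOLD = 0.8  # assumption: four-fifths rule of thumb

rates = selection_rates(DECISIONS)
ratio = disparate_impact_ratio(rates, protected="Y", reference="X")
flagged = ratio < THRESHOLD

print(rates)
print(f"ratio = {ratio:.2f}, flagged = {flagged}")
```

With this toy data, group Y is approved half as often as group X, so the check flags the system for review. A single ratio like this ignores overlapping characteristics and legitimate explanatory factors, which is the gap that more sophisticated tools aim to close.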

Congresswoman Maxine Waters has also penned a letter to the Government Accountability Office (GAO) expressing concern about the documented biases AI is already exhibiting in the housing sector, from loan and mortgage rates to rental application approvals and denials, and requesting that the GAO take these red flags seriously and research better, more just practices.

An example Waters uses in her letter is that real estate companies are offering far more services in predominantly white areas and far fewer, if any, in regions populated by mainly non-white residents; another cited example is that houses in mostly-Black neighborhoods are consistently proven to be undervalued when rated by an automated valuation model (AVM) — a type of AI that estimates real estate values.

Thus, while AI can positively impact society, it’s important to tackle aspects that are proven to be discriminatory. Continuous oversight and regulation of such systems are essential for ensuring that AI systems treat everyone fairly and bring benefits for all.

References

[1] Reuters. (2018, October 10). INSIGHT-Amazon scraps secret AI recruiting tool that showed bias against women. Retrieved March 28, 2024, from <https://www.reuters.com/article/idUSL2N1WP1RO/>

[2] Facts and Factors. (2022, September 14). Global AI Recruitment Market Share Is Expected To Grow At A CAGR Of 6.5% By 2028. Facts & Factors. Retrieved March 28, 2024, from <https://www.fnfresearch.com/news/global-ai-recruitment-market>

[3] National Bureau of Economic Research. (2021, November). Racial Discrimination and Housing Outcomes in the United States Rental Market. NBER Working Paper Series. Retrieved March 28, 2024, from <https://www.nber.org/system/files/working_papers/w29516/w29516.pdf>

[4] JAMA Network. (2020, September 22/29). Geographic Distribution of US Cohorts Used to Train Deep Learning Algorithms. Retrieved March 28, 2024, from <https://jamanetwork.com/journals/jama/article-abstract/2770833>

[5] Office of the Legislative Counsel. (2010, June 9). Compilation of Patient Protection and Affordable Care Act. Retrieved March 28, 2024, from <https://housedocs.house.gov/energycommerce/ppacacon.pdf>

[6] Royal Statistical Society. (2016, October 7). To predict and serve? Retrieved March 28, 2024, from <https://rss.onlinelibrary.wiley.com/doi/full/10.1111/j.1740-9713.2016.00960.x>

[7] Yale Law School. (2024). Algorithms in Policing: An Investigative Packet. Retrieved March 28, 2024, from <https://law.yale.edu/sites/default/files/area/center/mfia/document/infopack.pdf>

[8] Harvard Business Review. (2023, March 6). Removing Demographic Data Can Make AI Discrimination Worse. Retrieved March 28, 2024, from <https://hbr.org/2023/03/removing-demographic-data-can-make-ai-discrimination-worse>

[9] Oxford Internet Institute. (2021, April 21). AI modelling tool developed by Oxford academics incorporated into Amazon anti-bias software. Retrieved March 28, 2024, from <https://www.oii.ox.ac.uk/news-events/ai-modelling-tool-developed-by-oxford-academics-incorporated-into-amazon-anti-bias-software-2/>